Researchers develop a super-hierarchical and explanatory analysis of magnetization reversal that could improve the reliability of spintronic devices.

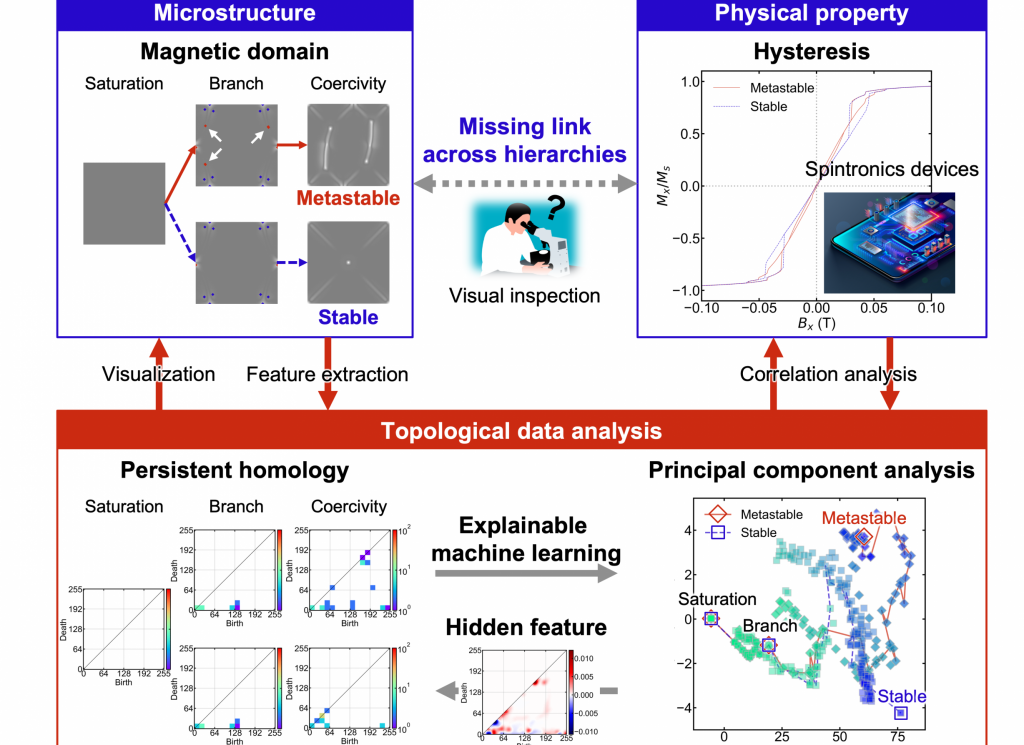

The reliability of data storage and the writing speed of advanced magnetic devices depend on drastic, complex changes in their microscopic magnetic domain structures. However, these changes are extremely difficult to quantify, which limits our understanding of magnetic phenomena. To tackle this, researchers in Japan combined machine learning and topology to develop an analysis method that quantifies the complexity of magnetic domain structures, revealing hidden features of magnetization reversal that are barely visible to the human eye.

Spintronic devices and their operation are governed by the microstructures of magnetic domains. These magnetic domain structures undergo complex, drastic changes when an external magnetic field is applied to the system. The resulting fine structures are not reproducible, which makes it challenging to quantify their complexity. As a result, our understanding of the magnetization reversal phenomenon has been limited to crude visual inspection and qualitative methods, a severe bottleneck in materials design. It has been difficult even to predict the stability and shape of the magnetic domain structures in Permalloy, a well-known material that has been studied for over a century.

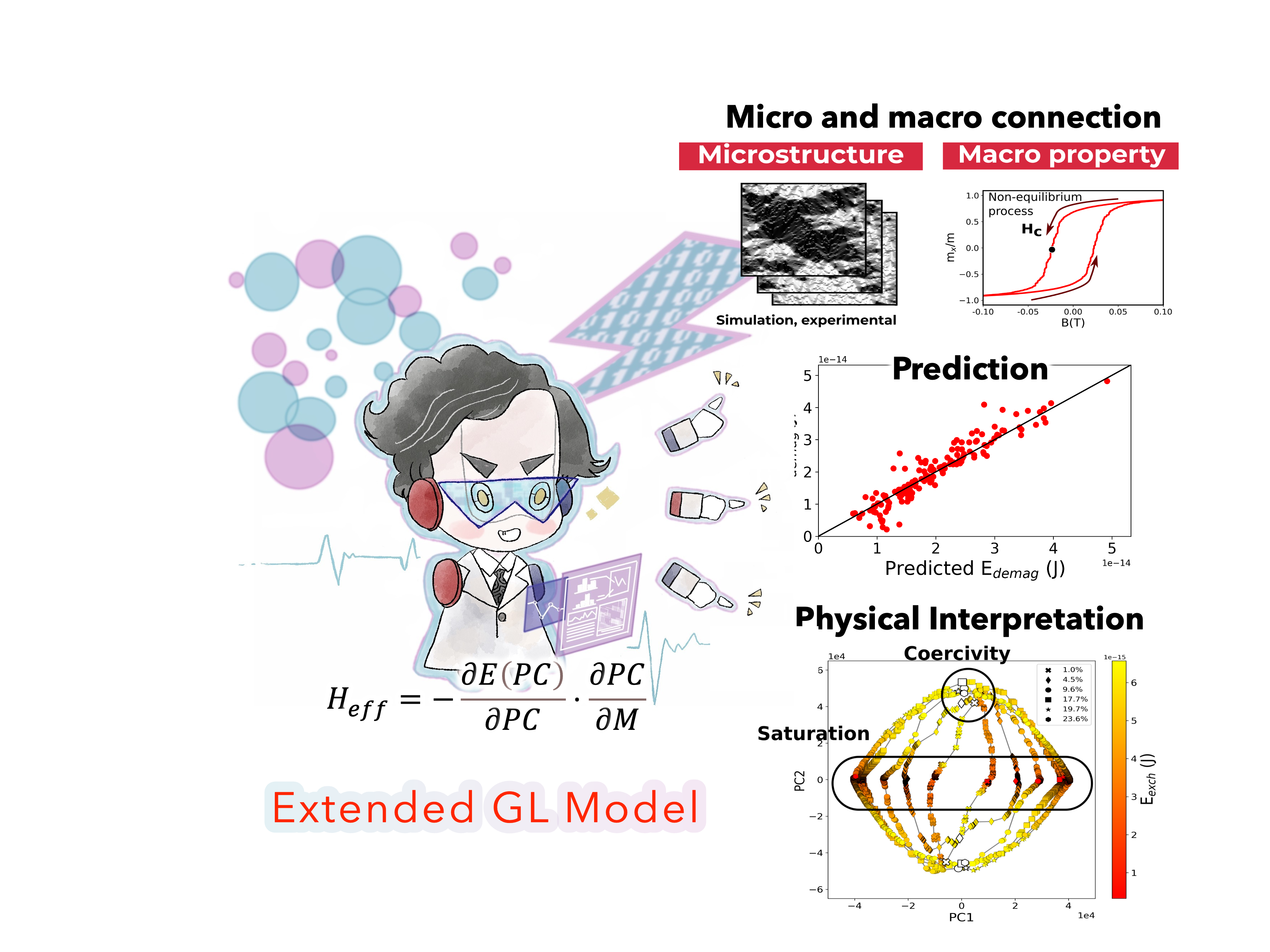

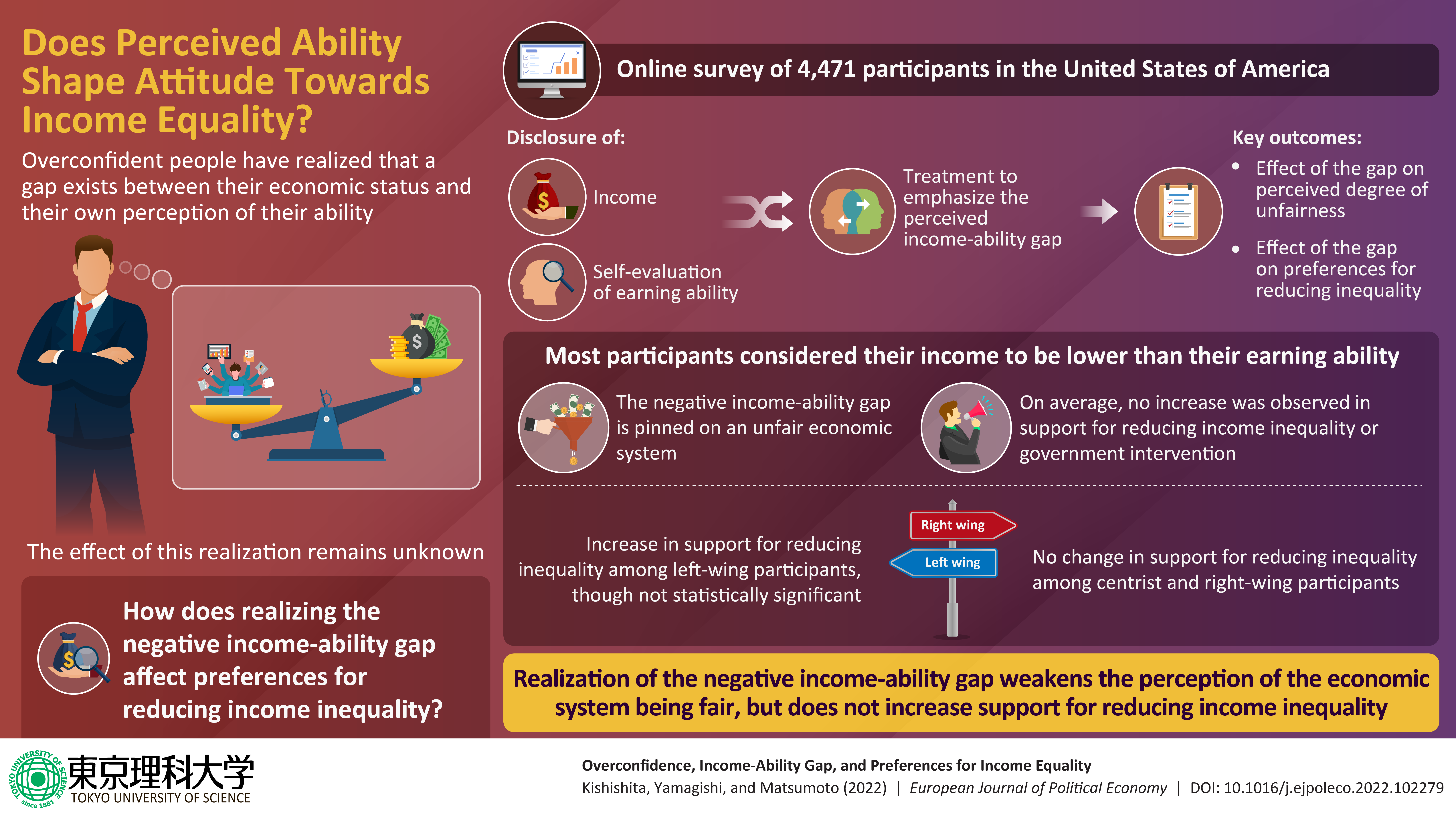

Addressing this issue, a team of researchers headed by Professor Masato Kotsugi from Tokyo University of Science, Japan, recently developed an AI-based method for analyzing material functions more quantitatively. In their work published in Science and Technology of Advanced Materials: Methods, the team used topological data analysis to develop a super-hierarchical and explanatory analysis method for magnetization reversal processes. In simple terms, according to the research team, "super-hierarchical" refers to connecting microscopic and macroscopic properties, which are usually treated in isolation but together contribute to the physical explanation.

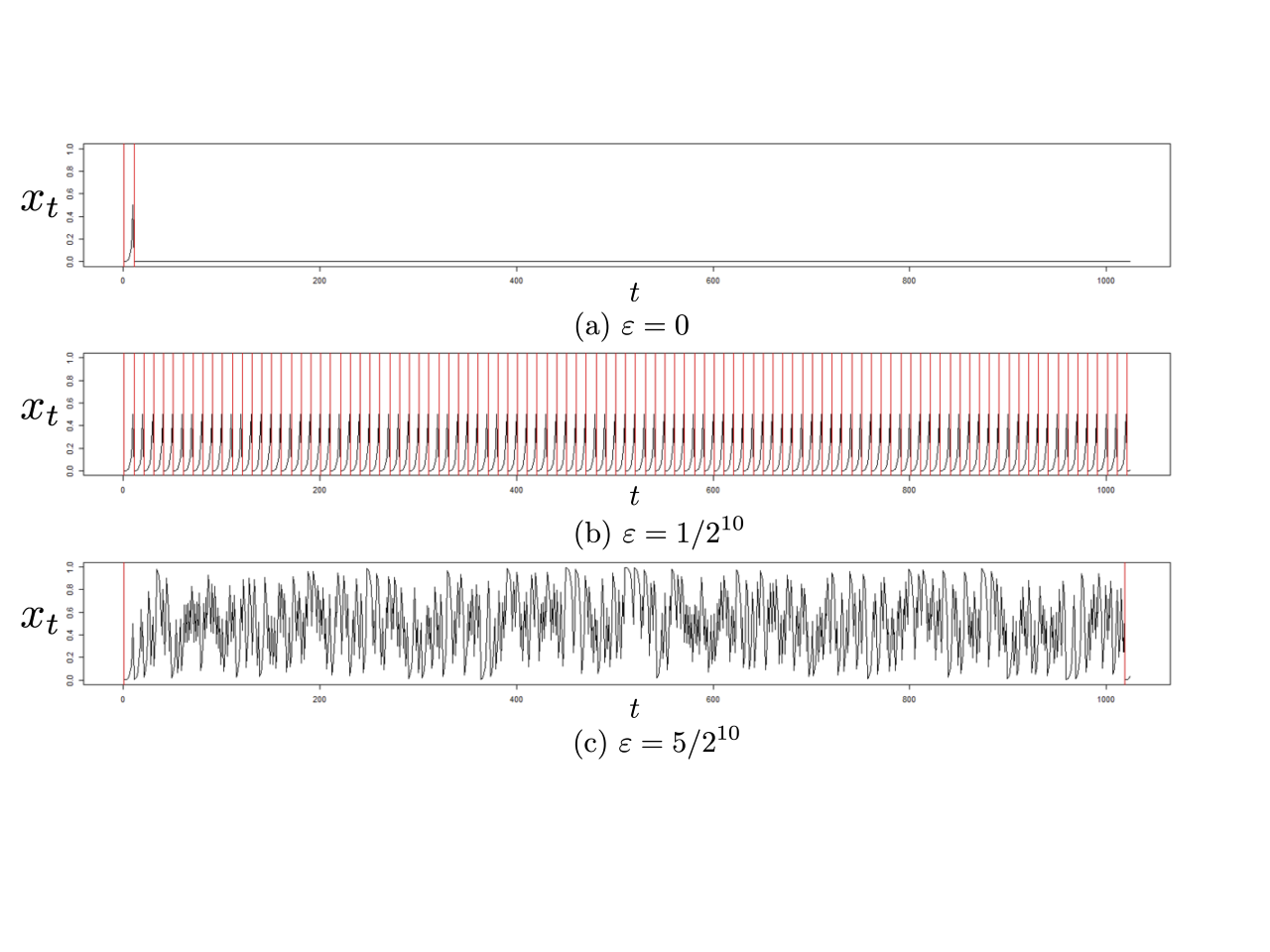

The team quantified the complexity of the magnetic domain structures using persistent homology, a mathematical tool from computational topology that measures topological features of data that persist across multiple scales. The team further visualized the magnetization reversal process in two-dimensional space using principal component analysis, a data analysis procedure that summarizes large datasets with a smaller set of "summary indices," making them easier to visualize and analyze. As Prof. Kotsugi explains, "The topological data analysis can be used for explaining the complex magnetization reversal process and evaluating the stability of the magnetic domain structure quantitatively." The team found that this analysis can detect slight structural changes, invisible to the human eye, that indicate a hidden feature dominating the metastable and stable reversal processes. They also traced the branching of the macroscopic reversal process back to its cause in the original microscopic magnetic domain structure.
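The article does not describe the authors' implementation, so the following is only a rough Python sketch of the two ideas named above, built on several assumptions. Each domain snapshot is treated as a 2D scalar field (for example, one magnetization component per pixel). Only 0-dimensional sublevel-set persistence (connected components) is computed, using a union-find structure. Each persistence diagram is summarized as a Betti curve, and the curves are projected onto two principal components. The function names, the Betti-curve vectorization, and the synthetic `tanh` fields are hypothetical illustrations, not the team's pipeline. The sketch requires NumPy.

```python
import numpy as np


def persistence_0d(field):
    """0-dimensional sublevel-set persistence of a 2D scalar field.

    Pixels are added in increasing order of value, and 4-connected neighbours
    are merged with union-find. When two components meet, the younger one
    (larger birth value) dies ("elder rule"). Returns (birth, death) pairs;
    components that never die get death = inf.
    """
    h, w = field.shape
    flat = field.ravel().astype(float)
    parent = np.full(h * w, -1, dtype=int)  # -1 marks a pixel not yet added
    birth = np.zeros(h * w)
    pairs = []

    def find(i):
        # Root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for idx in np.argsort(flat, kind="stable"):
        parent[idx] = idx
        birth[idx] = flat[idx]
        r, c = divmod(int(idx), w)
        for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if not (0 <= rr < h and 0 <= cc < w):
                continue
            j = rr * w + cc
            if parent[j] == -1:
                continue  # neighbour not in the sublevel set yet
            a, b = find(idx), find(j)
            if a == b:
                continue
            if birth[a] < birth[b]:
                a, b = b, a  # make `a` the younger component
            if birth[a] < flat[idx]:  # skip zero-length bars
                pairs.append((float(birth[a]), float(flat[idx])))
            parent[a] = b
    roots = [i for i in range(h * w) if parent[i] == i]
    pairs.extend((float(birth[i]), float("inf")) for i in roots)
    return pairs


def betti_curve(pairs, thresholds):
    """Number of components alive at each threshold t (birth <= t < death)."""
    return np.array([sum(b <= t < d for b, d in pairs) for t in thresholds])


def pca_2d(features):
    """Project rows of `features` onto their first two principal components."""
    X = np.asarray(features, dtype=float)
    Xc = X - X.mean(axis=0)  # centre each feature
    _, s, vt = np.linalg.svd(Xc, full_matrices=False)
    scores = Xc @ vt[:2].T
    explained = s**2 / np.sum(s**2)  # explained-variance ratio per component
    return scores, explained[:2]


# Hypothetical data: noisy "magnetization" maps biased by a swept external field.
rng = np.random.default_rng(0)
thresholds = np.linspace(-1.0, 1.0, 41)
curves = []
for h_ext in np.linspace(-0.8, 0.8, 9):
    m = np.tanh(rng.normal(size=(32, 32)) + 3 * h_ext)
    curves.append(betti_curve(persistence_0d(m), thresholds))
scores, explained = pca_2d(curves)
print("2D trajectory of the sweep:\n", scores.round(3))
print("explained variance ratio:", explained.round(3))
```

Betti curves are used here only because they are a simple way to turn a persistence diagram into a fixed-length vector that PCA can consume. Persistence images or landscapes are common alternatives. Loops and other higher-dimensional features would call for a dedicated persistent homology library.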

The novelty of this research lies in its ability to connect magnetic domain microstructures and macroscopic magnetic functions freely across hierarchies by applying the latest mathematical advances in topology and machine learning. This makes it possible to detect subtle microscopic changes and then predict stable or metastable states in advance, which was previously impossible. "This super-hierarchical and explanatory analysis would improve the reliability of spintronics devices and our understanding of stochastic/deterministic magnetization reversal phenomena," says Prof. Kotsugi.

Interestingly, the new algorithm, with its superior explanatory capability, can also be applied to study chaotic phenomena such as the butterfly effect. On the technological front, it could improve the reliability of writing in next-generation magnetic memory and aid the development of new hardware for the next generation of devices.

Reference

DOI: https://doi.org/10.1080/27660400.2022.2149037

Title of original paper: Super-hierarchical and explanatory analysis of magnetization reversal process using topological data analysis

Journal: Science and Technology of Advanced Materials: Methods